Last weekend I decided to finally put some effort into investigating Clang, the C/C++/Objective-C frontend for LLVM. Clang is interesting as it is not only designed to provide efficient parsing and processing during compilation, but also as a platform for program analysis tools. Other options for static analysis of C/C++ exist e.g. GCC extensions, cscope hackery, and the various things built on top of CIL (e.g. Frama-C). However, Clang is attractive to me as it supports both C and C++, has a very permissive license, includes a well designed, extensive and documented API and is itself written in C++ (which might be a negative point depending on your view of the world but I like it :P). It also appears to have the backing of both Apple and Google, with a number of their engineers contributing regularly to both LLVM and Clang. All in all, it feels like a maturing project with a skilled and active community behind it.

Clang supports three approaches to writing analysis tools. Using libclang is the encouraged method for most tasks and is probably what you’re looking for if all you want to do is iterate over ASTs and pull out data. The next option is to write a plugin for Clang to be run during the build phase of a project. This is slightly more involved and doesn’t have the benefit of allowing you to use a Python/whatever wrapper around the API. However, if you want to do things like automated source rewriting, or more involved static analysis as part of a build, then this is where you want to be. The final approach is using libtooling. I haven’t looked into writing anything based on this yet but it appears to offer the benefits of writing a standalone tool provided by libclang with the power of full AST control offered by a plugin. The only potential downsides, in comparison to libclang, that I can see are that you cannot write your code in a higher level language and it does not have access to as convenient an API.

After a bit of digging around in documentation and source, I think libclang offers the most gentle introduction to Clang as a program analysis platform. It’s not as powerful as the other two methods but has a stable API (check out include/clang-c/Index.h for the C interface and bindings/python/clang/cindex.py for the Python bindings) and supports a nice visitor based approach for writing tools that traverse an AST. From reading over the above code, and following Eli Bendersky’s introduction to using the Python bindings, you should be able to get up and running fairly easily. As a side note, libclang has progressed somewhat since that blog post so the limitations related to missing cursor kinds are now largely alleviated. There are still some missing features but we’ll get to that later.

A relevant question about now is probably “What the hell has all of that got to do with interpreter fuzzing?” In general, not a whole lot =) However, when trying to think of some small problem I could tackle to get a feel for libclang, I recalled an issue I previously encountered when fuzzing PHP. When fuzzing an interpreter there are typically two high-level stages that are somewhat unique to interpreter fuzzing: 1) discover the functions you can call and 2) discover how to correctly call them, e.g. the number and types of their arguments.

In this regard, PHP is a slightly easier target than say, Python or Ruby, because on the first point it has a lot of global functions that can be called without instantiating a class or figuring out what module to import. These functions can be easily discovered using get_defined_functions. Using this approach it is simple to enumerate a fairly large attack surface that calls directly into the C backend (somewhere upwards of 500 functions in a default configuration) and get fuzzing.

Point 2 remains a problem however. Each PHP function implemented in the C backend takes a number of typed parameters. Some type conversion can and does take place, which means you can specify a boolean when an int is required or other such things. Naturally, there are some types that are completely incompatible, e.g. a function callback is required and you provide an integer type. If you get this wrong the function will bail out without performing any further processing. As well as incorrect argument types, specifying an incorrect number of arguments will also often result in the function execution terminating before anything interesting happens. Precise implementation details can be found in the zend_parse_va_args and zend_parse_arg_impl functions of Zend/zend_API.c.

When fuzzing PHP we are then left with a bit of a conundrum. We can easily discover a substantial attack surface but we still need to figure out the types and number of arguments that each function expects. One solution is to just play a numbers game. Fuzzing an interpreter is fast… really fast. It is also easily distributed and instrumented. So, if we just guess at the types and parameters we’ll probably be correct enough of the time [1]. This has a slight issue, in that there is going to be a bias towards functions that take a smaller number of arguments for purely probabilistic reasons. An improvement is to just parse the error messages from PHP which give an indication as to why the function bailed out e.g. incorrect types or incorrect number of arguments. This is all kind of ugly however and it would be nice if we could just know up front the correct argument types and the exact number required.
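
To put rough numbers on that bias, here is a back-of-the-envelope sketch. The model is purely illustrative and not taken from PHP: it assumes the argument count is guessed uniformly from 1 to max_args, and that each argument type is guessed uniformly from n_types candidates of which exactly one is accepted. Real odds are better because of type conversion, but the shape of the curve is the point.

```python
from fractions import Fraction

def hit_probability(n_args, n_types=6, max_args=5):
    """Chance that one random call matches a function taking n_args arguments.

    Illustrative assumptions only: the argument count is guessed uniformly
    from 1..max_args, and each argument type is guessed uniformly from
    n_types candidates, exactly one of which is accepted.
    """
    count_ok = Fraction(1, max_args)          # guessed the right arity
    types_ok = Fraction(1, n_types) ** n_args  # guessed every type correctly
    return count_ok * types_ok

for n in range(1, 4):
    print(n, hit_probability(n))
```

Under these made-up parameters each extra argument divides the hit rate by n_types, which is why functions with short signatures soak up most of the successful calls.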

Enter Clang! An efficient solution for this problem is simply to parse every C file included in the PHP build, find all calls to zend_parse_parameters, extract the format string that specifies the arguments and their types, and then relate this information back to the function names discovered via get_defined_functions. It’s a fairly straightforward task on the PHP code base and could probably be achieved via regular expressions and some scripting. That said, using libclang we can come up with a much tidier and less fragile solution. Before I delve into the solution I should mention that I’ve uploaded the code to GitHub under the name InterParser, minus the fuzzer, should you want to try it out for yourself, extend it or whatever.

I’ll explain the code using questions for which the answers were not immediately obvious from the Clang documentation or are things that were interesting/surprising to me.

How do I run my libclang based tool on all files in a build?

The solution to this is also a solution to the next problem so I’ll deal with it there!

How do I know the correct compiler arguments to use when parsing a file?

The clang_parseTranslationUnit function in Index.h allows you to specify the arguments that would be passed to the compiler when parsing a particular source file. In some cases it is necessary that you specify these arguments correctly or the code that is parsed will not accurately reflect the code that is compiled when you run make. For example, if a chunk of code is enclosed within #if BLA / #endif directives and BLA is supposed to be defined, or not, via the compiler arguments, then unless you provide the same arguments when creating the translation unit that chunk will not be parsed as it is in the real build.

This problem, and the previous one, could easily be solved if we could tell 1) every file processed when make is run and 2) the command line arguments passed to the compiler on each invocation. After browsing the Clang documentation for a solution, a friend of mine suggested the obvious answer of just wrapping the compiler in a script that logs the relevant information during the build. The script creplace.py provides this functionality. By pointing the CC and CXX environment variables at it you should get a log of all files processed by the compiler when make is run, as well as the arguments passed.
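
For readers who want to see the idea without opening the repository, here is a minimal sketch of such a wrapper. It is not the actual creplace.py: the REAL_CC and CC_LOG environment variables, the default log path and the JSON-lines log format are all assumptions made for illustration.

```python
#!/usr/bin/env python3
import json
import os
import sys

# Hypothetical settings, overridable from the environment.
REAL_CC = os.environ.get("REAL_CC", "clang")
LOG_PATH = os.environ.get("CC_LOG", "/tmp/cc_invocations.log")

def record_invocation(argv, log_path=LOG_PATH, cwd=None):
    """Append one JSON object describing a compiler invocation to log_path."""
    entry = {"cwd": cwd or os.getcwd(), "argv": list(argv)}
    with open(log_path, "a") as log:
        log.write(json.dumps(entry) + "\n")
    return entry

def main():
    args = sys.argv[1:]
    record_invocation(args)
    # Replace this process with the real compiler so the build behaves as usual.
    os.execvp(REAL_CC, [REAL_CC] + args)

# A compiler is never invoked without arguments, so do nothing in that case.
if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```

Recording the working directory alongside the arguments matters because make often changes directory, so relative source and include paths only make sense relative to where the compiler was run. A C++ build would need a second copy (or a second REAL_CC value) pointing at the C++ compiler.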

The ccargparse.py module provides the load_project_data function which you can then use to build a Python dictionary of the above information. If there’s an inbuilt Clang API to do all this I didn’t find it [2], but if not you should be able to just drop in my code and work from there! See the main function of parse_php.py for exact usage details.
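
As a rough idea of what such a loader has to do, here is a sketch that is not the real load_project_data. It assumes a log with one JSON object per line, each holding a "cwd" string and an "argv" list; that format, the function name and the suffix list are my own choices for the example.

```python
import json
import os

SOURCE_SUFFIXES = (".c", ".cc", ".cpp", ".cxx")

def load_invocations(lines):
    """Map absolute source path -> compiler flags (source, -c and -o removed)."""
    project = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        entry = json.loads(line)
        argv = entry["argv"]
        sources = [a for a in argv if a.endswith(SOURCE_SUFFIXES)]
        flags = []
        skip_next = False
        for arg in argv:
            if skip_next:
                skip_next = False          # this was the path following "-o"
            elif arg == "-o":
                skip_next = True           # drop "-o" and the output path
            elif arg == "-c" or arg in sources:
                continue                   # not useful when only parsing
            else:
                flags.append(arg)
        for src in sources:
            path = os.path.normpath(os.path.join(entry["cwd"], src))
            project[path] = flags
    return project
```

One caveat: relative include paths such as -Iinclude are left untouched here, so the parsing tool would need to run from the logged working directory or rewrite them to absolute paths.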

Once the compiler arguments have been extracted and converted to a list they can just be provided to the index.parse method when constructing the translation unit for a given file. e.g.

    comp_args = load_project_data(cc_file)
    args = comp_args[file_path]
    index = clang.Index.create()
    tu = index.parse(file_path, args)

How do I find all functions within a file?

Our first task is to find all calls to zend_parse_parameters and note the name of the top level backend API function so that we can later relate this to a user-space PHP function call. To do this we first need to find all function declarations within the translation unit for a source file, e.g. in the process_all_functions method:

    for c in tu.cursor.get_children():
        if c.kind == clang.CursorKind.FUNCTION_DECL:
            f = c.location.file
            if file_filter and f.name != file_filter:
                continue
            fmt_str = process_function(c)

This is a fairly simple task: we iterate over all children of the top level translation unit for the given source file and compare the cursor kind against FUNCTION_DECL, for a function declaration. It’s worth noting that the children of a translation unit can include various things from files that are #include‘d. As a result, you may want to check that the file name associated with the location of the child is the name of the file passed to index.parse.

How do I find all calls to a particular function?

For each function declaration we can then iterate over all of its children and search for those with the kind CALL_EXPR. This is performed in the process_function method. Firstly, we need to check if the function being called has the correct name. The first child of a CALL_EXPR AST node will represent the function itself, with the rest representing the arguments to this function.

The last statement isn’t quite correct. The C and C++ standards specify a number of conversions that can take place on a function call, so the direct children of a CALL_EXPR AST node will represent these conversions and then the direct child of these conversion nodes will contain the details we require. Clang does have a mode to dump the AST for a given source file so if you’re curious what the AST actually looks like try passing -ast-dump or -ast-dump-xml as parameters to the clang compiler.
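
If you would rather inspect the tree from Python than from the compiler's dump, a small recursive walker is enough. The sketch below only relies on each node having kind.name, displayname and get_children(), which the cindex Cursor class provides; the function name dump_ast is my own.

```python
def dump_ast(node, depth=0, out=None):
    """Collect one indented 'KIND displayname' line per node, depth first."""
    out = [] if out is None else out
    line = "  " * depth + node.kind.name + " " + (node.displayname or "")
    out.append(line.rstrip())
    for child in node.get_children():
        dump_ast(child, depth + 1, out)
    return out

# Usage with the real bindings (assumes libclang is installed and "file.c"
# exists; both are placeholders):
#   import clang.cindex
#   tu = clang.cindex.Index.create().parse("file.c", args=["-DBLA"])
#   print("\n".join(dump_ast(tu.cursor)))
```

Running this over a single function is a quick way to see where the UNEXPOSED_EXPR wrappers sit relative to the nodes you actually care about.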

As an example, on a function call we encounter a CALL_EXPR node and its first child represents the decay of the function to a pointer as specified by the C/C++ standards. This node will have the kind UNEXPOSED_EXPR. It will have a single child of type DECL_REF_EXPR from which we can retrieve the function name via the displayname attribute. The following code in process_function performs the function name extraction and comparison.

    if n.kind == clang.CursorKind.CALL_EXPR:
        unexposed_exprs = list(n.get_children())
        func_name_node = get_child(unexposed_exprs[0], 0)
        if func_name_node.displayname == "zend_parse_parameters":
            ...
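
The lookup above assumes the call sits at a known depth. A slightly more general sketch, which is not what process_function does verbatim, searches the whole function body recursively and steps through any number of UNEXPOSED_EXPR wrappers. The helper names are hypothetical, and the code compares kind.name strings so that it can be exercised without libclang.

```python
def callee_name(call):
    """Return the called function's name for a CALL_EXPR node, or None."""
    children = list(call.get_children())
    if not children:
        return None
    node = children[0]
    # Step through implicit conversion wrappers until a named reference appears.
    while node.kind.name == "UNEXPOSED_EXPR":
        inner = list(node.get_children())
        if not inner:
            return None
        node = inner[0]
    return node.displayname if node.kind.name == "DECL_REF_EXPR" else None

def find_calls(node, name):
    """Yield every CALL_EXPR at or beneath node whose callee is `name`."""
    if node.kind.name == "CALL_EXPR" and callee_name(node) == name:
        yield node
    for child in node.get_children():
        yield from find_calls(child, name)
```

With a FUNCTION_DECL cursor c, list(find_calls(c, "zend_parse_parameters")) would return every matching call, including those nested inside if statements or switch cases.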

How do I extract the parameters to a function call?

Once we know we have the correct function call we then need to extract the format string parameter that provides the type specification for the user-space API call. As with the node representing the function, arguments may also undergo conversions. The second argument to zend_parse_parameters is the one we are interested in, and it is usually specified as a string literal. However, a string literal is an array, and when it is passed as an argument it decays to a pointer (the array-to-pointer conversion). To account for this, we again go through an UNEXPOSED_EXPR node representing the conversion to access the node for the string literal itself.

Things get a little inconvenient at this point. Once we have the node representing the string literal we ideally want some way to access the actual value specified in the source code. It turns out that this functionality hasn’t been added to the Python bindings around libclang yet. Fortunately, a guy by the name of Gregory Szorc has been working on a branch of libclang and the associated Python bindings that adds the get_tokens method to AST nodes. His code is great but also contains a bug when get_tokens is called on an empty string literal. Until that gets fixed I’ve mirrored his cindex.py and enumerations.py files. Drop both into your clang/bindings/python/clang directory and you should be good to go!

The actual code to extract the string literal given the node representing it is then straightforward.

    if tkn_container.kind != clang.CursorKind.STRING_LITERAL:
        raise VariableArgumentError()
    tokens = list(tkn_container.get_tokens())
    if tokens[0] is None:
        return ""

The check on the kind of the node is required as in a minuscule number of cases the format string parameter is passed via a variable rather than directly specified. This situation is so rare that it isn’t worth handling. The only other slightly odd thing is that when get_tokens is called on an AST node representing a string literal, like the first parameter in func("xyz", a), it will return a generator for two elements. The first will be the string literal itself, including the double quotes, while the second will represent the comma separator. I’m not sure if this is a bug or not but it’s not a big deal. If the string literal is empty then the generator will return None.
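
Given those quirks, the last step of turning token spellings into the bare format string could look like the sketch below. The function name is hypothetical and it works on a plain list of spellings (what [t.spelling for t in tokens if t] would give you, or a list containing None), so it makes no claims about the bindings beyond the behaviour described above. It does not interpret C escape sequences, which format strings rarely contain.

```python
def format_string_from_spellings(spellings):
    """Return the contents of the first quoted string literal in spellings.

    `spellings` is a list such as ['"ss|s"', ','] where entries may also be
    None. Returns "" when no usable literal token is present.
    """
    for text in spellings:
        if text and len(text) >= 2 and text[0] == '"' and text[-1] == '"':
            return text[1:-1]   # strip the surrounding double quotes
    return ""

print(format_string_from_spellings(['"ss|s"', ',']))
```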

Conclusion

At this point we can associate the functions calling zend_parse_parameters with the format string passed to that call, and thus the types and number of arguments that the function expects. In a fairly small amount of Python we have a robust solution to problem 2, discussed earlier. A question you may have is “How do you then associate the function name/argument specification from the C code with the corresponding user-space PHP function?” Well, PHP is nice in that it doesn’t do a massive amount of name mangling: consistently, if we have a user-space function called func_one then the backend C function will be called zif_func_one (in the code it will probably look like PHP_FUNCTION(func_one) but Clang will expand the macro for you).

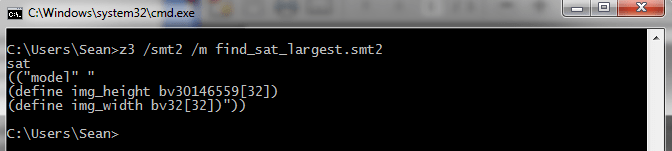

When parse_php.py terminates it will output something like the following:

    INFO:main:API info for 518 functions in 68 files written to php_func_args.txt

Inspecting the output file we’ll see function to type specification mappings:

    # /Users/sean/Documents/git/php-src/ext/standard/levenshtein.c
    zif_levenshtein ss sss sslll
    # /Users/sean/Documents/git/php-src/main/main.c
    zif_set_time_limit l
    # /Users/sean/Documents/git/php-src/ext/standard/versioning.c
    zif_version_compare ss|s
    ...

This tells us that a user-space PHP script can correctly call levenshtein with two strings, three strings or two strings and three longs. It can call set_time_limit with a single long only. version_compare can be called with two or three strings and so on and so forth. So, with this information at hand we can construct a PHP fuzzer that no longer has to guess at the number of arguments to a function or their types.
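
A fuzzer consuming this file needs to turn each line back into a function name and a set of signatures. The sketch below shows one way to do that; it is not part of InterParser. The letter-to-type table is partial and reflects my understanding of the zend_parse_parameters specifiers, so check it against the parameter parsing documentation for your PHP version. The treatment of "|" as the start of the optional arguments and of "/" and "!" as modifiers on the preceding specifier follows the same understanding.

```python
# Partial, assumed mapping from zend_parse_parameters type letters to names.
TYPE_NAMES = {
    "l": "long", "d": "double", "s": "string", "b": "bool",
    "a": "array", "r": "resource", "o": "object", "z": "zval",
    "f": "callback",
}
MODIFIERS = "/!"  # flags that qualify the preceding specifier

def parse_spec(spec):
    """Split a format string into (required, optional) lists of type names."""
    required, optional = [], []
    target = required
    for ch in spec:
        if ch == "|":
            target = optional          # everything after '|' is optional
        elif ch in MODIFIERS:
            continue
        else:
            target.append(TYPE_NAMES.get(ch, "unknown:" + ch))
    return required, optional

def parse_line(line):
    """Parse 'zif_name spec spec ...' into (php_name, [parsed specs])."""
    c_name, *specs = line.split()
    php_name = c_name[len("zif_"):] if c_name.startswith("zif_") else c_name
    return php_name, [parse_spec(s) for s in specs]
```

For example, parse_line("zif_version_compare ss|s") yields the name version_compare with two required strings and one optional string, which is enough for a generator to pick a valid arity and a plausible value for each slot.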

Extensions

The most obvious extension of this code that comes to mind is in pulling out numeric values and string literals from comparison operators. For example, say we’re analyzing a function with the following code:

    if (x == 0xc0)
        ...
    else
        ...

It might be an idea to pull out the constant 0xc0 and add it to a list of possible values for numeric input types when fuzzing the function in question. Naturally, you’ll end up with extra constants that are not compared with the function inputs but I would guess that this approach would lead to some gain in code coverage during fuzzing.
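
I haven’t implemented this, but a first cut might look like the sketch below. It gathers every integer literal that appears under a BINARY_OPERATOR node, reading the literal's text from its first token. That is deliberately cruder than the idea described: it does not check that the operator is a comparison, so arithmetic constants are swept up too. The function names are hypothetical and nodes are matched by kind.name so the logic can be tried without libclang.

```python
def parse_c_int(text):
    """Convert a C integer literal spelling ('0xc0', '010', '42UL') to int."""
    digits = text.rstrip("uUlL")               # drop unsigned/long suffixes
    if len(digits) > 1 and digits[0] == "0" and digits.isdigit():
        return int(digits, 8)                  # C octal, which int(x, 0) rejects
    return int(digits, 0)                      # decimal, 0x... and 0b...

def _collect_literals(node, found):
    if node.kind.name == "INTEGER_LITERAL":
        tokens = list(node.get_tokens())
        if tokens and tokens[0] is not None:
            try:
                found.add(parse_c_int(tokens[0].spelling))
            except ValueError:
                pass  # macro-expanded or otherwise unusual spelling
    for child in node.get_children():
        _collect_literals(child, found)

def collect_int_constants(node, found=None):
    """Gather values of every INTEGER_LITERAL beneath a BINARY_OPERATOR."""
    found = set() if found is None else found
    if node.kind.name == "BINARY_OPERATOR":
        _collect_literals(node, found)
    for child in node.get_children():
        collect_int_constants(child, found)
    return found
```

The resulting set could simply be appended to the pool of candidate values the fuzzer draws from for long arguments of that function.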

Resources

Introduction to writing Clang tools

Eli Bendersky’s blog post on parsing C with libclang

Unofficial Clang tutorials

[1] This is what I did when I first fuzzed PHP a long time ago. You hit a lot of incorrect type/argument count errors but you can get so many executions per second that in general it works out just fine =)

[2] Two days after I wrote this it seems that support for the compilation database functionality was added to the Python bindings. You can read about this feature here. Using this feature is probably a more portable way of gathering compiler arguments and pumping them into whatever APIs require them.
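
I haven’t switched InterParser over to it, but pulling arguments from a compilation database might look roughly like the sketch below. The helper takes any object that behaves like clang.cindex.CompilationDatabase, whose getCompileCommands method returns commands with an arguments attribute. The filtering of the compiler name and the source path is my own assumption about what index.parse wants, and the paths in the usage comment are placeholders.

```python
def args_from_database(db, source_path):
    """Return compiler flags for source_path from a compilation database.

    `db` is expected to behave like clang.cindex.CompilationDatabase.
    """
    commands = db.getCompileCommands(source_path)
    if not commands:
        return []                      # file not present in the database
    first = next(iter(commands))
    argv = list(first.arguments)
    # Assumption: argv[0] is the compiler and the source file also appears in
    # the list; neither should be handed to index.parse as a flag.
    return [a for a in argv[1:] if a != source_path]

# Usage with the real bindings (requires libclang and a compile_commands.json):
#   import clang.cindex
#   db = clang.cindex.CompilationDatabase.fromDirectory("/path/to/build")
#   args = args_from_database(db, "/path/to/file.c")
```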